Understanding the probability distributions over weights in deep learning models is important for building reliable AI systems. An ICLR 2024 study offers new insights into the structure of these distributions, particularly in computer vision applications.

The research tackles key challenges in analyzing high-dimensional parameter spaces. Because a network can have thousands of parameters or more, traditional methods struggle to capture meaningful patterns. The work makes progress by explicitly accounting for weight-space symmetries.

Researchers released the first large-scale checkpoint dataset, enabling deeper investigation into model behavior. This resource helps quantify uncertainty more accurately while improving robustness in practical scenarios.

Findings challenge conventional wisdom about regularization effects and reveal surprising multi-modal characteristics. The study opens new possibilities for interpreting complex probability landscapes in advanced machine learning architectures.

Understanding Bayesian Neural Network Posteriors

Modern AI systems rely on probabilistic reasoning to handle uncertainty effectively. Unlike traditional models, which learn a single fixed value for each weight, Bayesian neural networks treat weights as random variables with probability distributions. This gives a principled way to express how confident a prediction is.

Definition and Core Concepts

The posterior distribution describes which network weights remain plausible after observing data. It combines prior beliefs with evidence, encoding both likely parameter values and the epistemic uncertainty about them. This duality makes it valuable for robust AI.
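In standard Bayesian notation (the symbols $w$ for the weights and $\mathcal{D}$ for the training data are assumptions introduced here, not the study's notation), the posterior follows from Bayes' rule:

```latex
p(w \mid \mathcal{D}) = \frac{p(\mathcal{D} \mid w)\, p(w)}{p(\mathcal{D})},
\qquad
p(\mathcal{D}) = \int p(\mathcal{D} \mid w)\, p(w)\, \mathrm{d}w
```

Here $p(w)$ is the prior and $p(\mathcal{D} \mid w)$ is the likelihood. The normalizing integral runs over every possible weight vector, which is why the exact posterior is intractable for networks of realistic size and has to be approximated.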

Two kinds of weight-space symmetry complicate the analysis: permutation and scaling. Permutation symmetries create duplicate solutions when hidden nodes are reordered. Scaling symmetries, by contrast, do not inherently produce extra modes, but they interact with L2 regularization.

Why Posteriors Matter in BNNs

Accurate posteriors directly improve uncertainty quantification. Ignoring weight-space symmetries leads to flawed analysis, which affects real-world applications. Standard training returns a single point estimate of the weights and so misses this structure, often overfitting to one solution.

Multi-modality, caused by permutation symmetries, reveals diverse valid configurations. Understanding these structures helps build more reliable models. The ICLR 2024 findings highlight how regularization interacts with posterior shapes, opening new research directions.

What Are Bayesian Neural Network Posteriors Really Like?

Modern machine learning relies on understanding complex probability landscapes. The latest research provides fresh perspectives on how these structures behave in high-dimensional spaces.

Modes and Multi-Modality in the Posterior

Equivalent weight configurations create multiple peaks in probability distributions. These modes represent equally valid solutions to the learning problem.

Permutation symmetries cause exact duplicates in the parameter space. The study analyzed 3,072 models to quantify this effect systematically.
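To get a feel for how many duplicates this produces, the sketch below counts hidden-unit permutations for a fully connected network. It assumes each hidden layer can be permuted independently, so the count is the product of the factorials of the hidden widths. The function name `permutation_copies` and the example widths are hypothetical and are not taken from the study.

```python
import math


def permutation_copies(hidden_widths):
    """Count hidden-unit permutations of a fully connected network.

    Each hidden layer of width h can be reordered in h! ways, and the
    layers are permuted independently, so the counts multiply.
    """
    return math.prod(math.factorial(h) for h in hidden_widths)


# One hidden layer with 4 units: 4! = 24 equivalent orderings.
copies_single_layer = permutation_copies([4])

# Two hidden layers with 3 and 2 units: 3! * 2! = 12 equivalent orderings.
copies_two_layers = permutation_copies([3, 2])
```

Even modest widths make the count enormous, which is one reason naive mode counting in weight space says little about functional diversity.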

Weight-Space Symmetries and Their Impact

Two fundamental symmetries shape the posterior landscape. Permutation symmetries produce identical outputs when nodes are reordered.
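The permutation case can be checked numerically. The following is a minimal NumPy sketch on a toy two-layer ReLU network; the architecture, the shapes, and the helper name `mlp` are illustrative assumptions rather than the study's setup.

```python
import numpy as np


def mlp(x, W1, b1, W2, b2):
    """Two-layer ReLU network: relu(x @ W1 + b1) @ W2 + b2."""
    hidden = np.maximum(x @ W1 + b1, 0.0)
    return hidden @ W2 + b2


rng = np.random.default_rng(0)
x = rng.normal(size=(5, 3))                      # 5 inputs, 3 features
W1, b1 = rng.normal(size=(3, 4)), rng.normal(size=4)
W2, b2 = rng.normal(size=(4, 2)), rng.normal(size=2)

# Reorder the 4 hidden units: permute the columns of W1 and the entries
# of b1, and apply the same permutation to the rows of W2.
perm = np.array([2, 0, 3, 1])
W1_p, b1_p, W2_p = W1[:, perm], b1[perm], W2[perm, :]

same_function = np.allclose(mlp(x, W1, b1, W2, b2),
                            mlp(x, W1_p, b1_p, W2_p, b2))
different_weights = not np.array_equal(W1, W1_p)
```

The two weight settings are distinct points in parameter space, yet they define the same function, so they receive the same likelihood.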

Scaling symmetries show surprising connections to L2 regularization. Unlike permutations, they don’t inherently create extra modes but influence their formation.
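One way to see the link to L2 regularization is sketched below, assuming a ReLU network, where multiplying a layer's incoming weights and biases by a positive factor and dividing its outgoing weights by the same factor leaves the function unchanged. The toy network, the factor `alpha`, and the helper names are assumptions for illustration, not the study's analysis.

```python
import numpy as np


def mlp(x, W1, b1, W2, b2):
    """Two-layer ReLU network: relu(x @ W1 + b1) @ W2 + b2."""
    hidden = np.maximum(x @ W1 + b1, 0.0)
    return hidden @ W2 + b2


def l2_penalty(*params):
    """Sum of squared entries over all parameter arrays."""
    return sum(float(np.sum(p ** 2)) for p in params)


rng = np.random.default_rng(1)
x = rng.normal(size=(5, 3))
W1, b1 = rng.normal(size=(3, 4)), rng.normal(size=4)
W2, b2 = rng.normal(size=(4, 2)), rng.normal(size=2)

# relu(alpha * z) == alpha * relu(z) for alpha > 0, so scaling the first
# layer up and the second layer down cancels out in the output.
alpha = 10.0
W1_s, b1_s, W2_s = alpha * W1, alpha * b1, W2 / alpha

same_function = np.allclose(mlp(x, W1, b1, W2, b2),
                            mlp(x, W1_s, b1_s, W2_s, b2))
penalty_before = l2_penalty(W1, b1, W2, b2)
penalty_after = l2_penalty(W1_s, b1_s, W2_s, b2)
```

The likelihood cannot tell the two settings apart, but an L2 penalty (equivalently, a Gaussian prior on the weights) assigns them different values. The rescaled copies therefore form a continuous family rather than separate modes, and the regularizer decides which member of the family is preferred.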

Uncertainty Quantification Through Posteriors

Accurate probability distributions enable better risk assessment. The research demonstrates how posterior structure affects predictive confidence.

Markov Chain Monte Carlo sampling faces challenges in these complex spaces. However, proper understanding of multi-modality improves Bayesian model averaging outcomes.
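Bayesian model averaging can be sketched as follows, assuming posterior samples of the weights are already available and each has produced class logits for the same inputs. The array shapes, the function names, and the random stand-in logits are illustrative assumptions.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def bayesian_model_average(logits_per_sample):
    """Average class probabilities over posterior samples.

    logits_per_sample has shape (n_samples, n_inputs, n_classes);
    the result has shape (n_inputs, n_classes).
    """
    return softmax(logits_per_sample).mean(axis=0)


def predictive_entropy(probs):
    """Entropy of each averaged predictive distribution, in nats."""
    return -np.sum(probs * np.log(probs + 1e-12), axis=-1)


rng = np.random.default_rng(0)
# Stand-in for the outputs of 8 sampled networks on 5 inputs, 3 classes.
logits = rng.normal(size=(8, 5, 3))

bma_probs = bayesian_model_average(logits)
entropy = predictive_entropy(bma_probs)
```

Probabilities are averaged rather than logits, so samples that disagree flatten the predictive distribution and raise its entropy. Samples drawn from several functionally different modes tend to disagree more than samples from a single mode.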

Practical Insights into BNN Posteriors

Practical implementation of probabilistic models requires deeper structural insights. The ICLR 2024 study bridges theory and application, offering tools to analyze real-world scenarios. Below, we explore visualization techniques, applications, and hurdles.

Visualizing High-Dimensional Posteriors

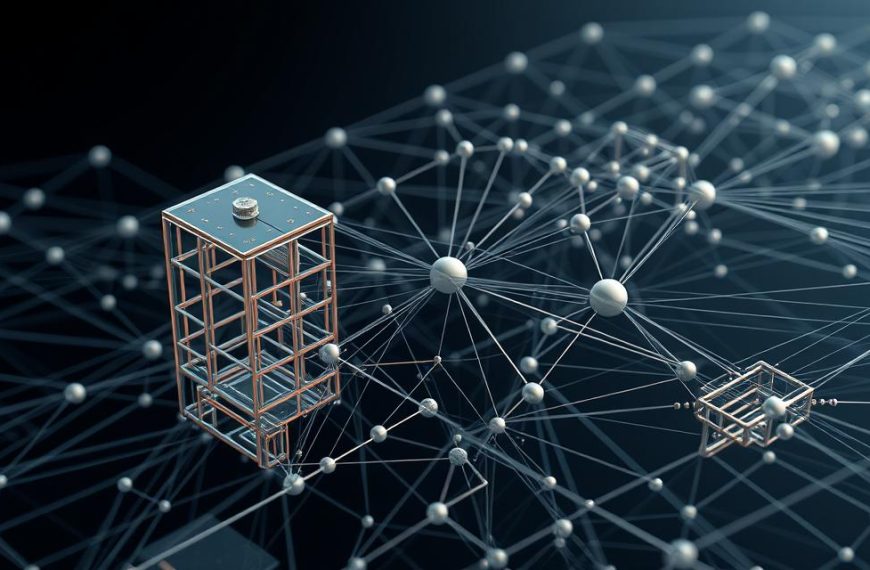

Dimensionality reduction simplifies complex probability landscapes. Techniques like t-SNE and PCA reveal clusters in the 3,072-model dataset. These patterns highlight equivalent solutions caused by permutation symmetries.
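The PCA step can be sketched with plain NumPy, assuming every model has been flattened into one weight vector. The data here is synthetic (two artificial clusters standing in for two modes), not the study's checkpoints, and `pca_project` is a hypothetical helper name.

```python
import numpy as np


def pca_project(weight_vectors, n_components=2):
    """Project rows of an (n_models, n_params) array onto the top
    principal components, computed via SVD of the centered data."""
    centered = weight_vectors - weight_vectors.mean(axis=0, keepdims=True)
    # Rows of vt are the principal directions, ordered by variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


rng = np.random.default_rng(0)
# Synthetic stand-in: 10 flattened weight vectors near each of two modes.
mode_a = rng.normal(loc=-3.0, scale=0.1, size=(10, 50))
mode_b = rng.normal(loc=3.0, scale=0.1, size=(10, 50))
weights = np.vstack([mode_a, mode_b])

coords = pca_project(weights)  # shape (20, 2), ready for a scatter plot
```

In this toy setup the first principal component separates the two clusters. With real checkpoints, clusters produced purely by permutations can be merged by aligning hidden units before projecting.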

Case studies demonstrate multi-modal distributions in medical imaging. For example, tumor detection models show distinct weight configurations with similar accuracy. This redundancy improves fault tolerance.

Real-World Applications and Challenges

Autonomous systems leverage posterior uncertainty for safer decisions. Variational inference balances speed and accuracy, while MCMC offers precision at higher computational costs.

| Method | Speed | Accuracy | Use Case |
|---|---|---|---|
| Variational Inference | Fast | Moderate | Real-time systems |
| MCMC | Slow | High | Medical diagnostics |
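Part of MCMC's cost comes from multi-modality: a single chain can need a very large number of steps to move between well-separated modes. The sketch below illustrates this with random-walk Metropolis on a one-dimensional, two-mode toy density. The density, step size, and chain length are assumptions chosen for illustration and are far simpler than a real network posterior.

```python
import numpy as np


def log_density(w):
    """Unnormalized log-density of an equal mixture of N(-6, 1) and N(6, 1)."""
    return np.logaddexp(-0.5 * (w + 6.0) ** 2, -0.5 * (w - 6.0) ** 2)


def random_walk_metropolis(log_p, w0, n_steps, step_size, rng):
    """Random-walk Metropolis with Gaussian proposals; returns the chain."""
    samples = np.empty(n_steps)
    w, lp = w0, log_p(w0)
    for i in range(n_steps):
        proposal = w + step_size * rng.normal()
        lp_proposal = log_p(proposal)
        # Accept with probability min(1, p(proposal) / p(w)).
        if np.log(rng.uniform()) < lp_proposal - lp:
            w, lp = proposal, lp_proposal
        samples[i] = w
    return samples


rng = np.random.default_rng(0)
chain = random_walk_metropolis(log_density, w0=-6.0, n_steps=2000,
                               step_size=0.5, rng=rng)

# Both modes carry equal mass, so an ideal sampler would spend about half
# of its time at w > 0.
fraction_right_mode = float(np.mean(chain > 0))
```

A chain started in the left mode with small steps will typically stay there for the whole run, so `fraction_right_mode` ends up far below one half. Running several chains from different starting points is a common way to cover more modes.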

Scalability remains a key challenge. The open-source code repository aids reproducibility, but large-scale deployments need optimized architectures. Future research may focus on hybrid approximation methods.

Conclusion

New research transforms how we analyze uncertainty in advanced learning models. The study reveals multi-modal structures and weight-space symmetries that redefine reliability in machine learning systems.

Accurate posterior analysis improves predictive confidence, especially in high-stakes applications. The released dataset accelerates progress in probabilistic AI, offering tools for deeper exploration.

Practical implementation requires addressing scalability and symmetry effects. For robust results, choose between variational inference, MCMC, or a hybrid of the two, depending on use-case demands.

Future work must refine visualization tools and theoretical frameworks. This foundation empowers next-gen models to balance accuracy with interpretability, advancing trustworthy AI.

FAQ

How do Bayesian neural networks handle uncertainty?

These models use posterior distributions over the weights to capture uncertainty, so each prediction comes with a predictive distribution or credible interval instead of a single fixed output.

Why are posteriors in BNNs often multi-modal?

Multi-modality arises from weight-space symmetries and diverse solutions fitting the data equally well, reflecting complex optimization landscapes.

What challenges exist in visualizing high-dimensional posteriors?

The parameter space is far too large to plot directly, so techniques such as dimensionality reduction or inspection of marginal distributions are used to interpret results.

How do weight symmetries affect posterior analysis?

Permutation symmetries create duplicate modes, which complicates inference. This can be addressed with techniques like random restarts or advanced sampling methods.

Where are Bayesian neural networks applied in real-world scenarios?

They excel in medical diagnosis, autonomous systems, and finance, all domains where quantifying uncertainty is critical for decision-making.