Modern machine learning systems rely on complex structures to process information. At their core, these systems transform raw data into meaningful insights through specialized components.

Consider a plant identification app. When you snap a photo, the system doesn’t recognize leaves instantly. Instead, it breaks down visual patterns through multiple processing stages. Each stage extracts increasingly sophisticated features from the image.

These processing stages form the backbone of deep learning architectures. The most powerful systems stack many of them, sometimes more than a hundred layers of interconnected nodes, with each layer refining the data a little further. This multi-stage approach enables computers to handle tasks ranging from speech recognition to medical diagnosis.

Research on artificial neurons dates back to the 1950s, and researchers have since made artificial intelligence far more capable by stacking these processing units. Today’s advanced models show how layered designs can outperform simpler alternatives, and the architecture continues to evolve as computing power grows.

Introduction to Hidden Layers in Neural Networks

Between the input and output of an AI model lies a powerful transformation mechanism. These intermediate components, known as hidden layers, are what neural architectures rely on to reshape raw data into actionable insights. Without them, a network reduces to a single linear mapping from inputs to outputs and cannot recognize complex patterns or make nuanced decisions.

Defining Hidden Layers

Hidden layers are mathematical processing stages sandwiched between the input layer and the output layer. Each layer contains neurons that multiply incoming values by weights, add a bias, and pass the result through an activation function. For example, a hidden layer of 4 neurons that receives 4 inputs learns 16 weights and 4 biases (20 parameters) and outputs 4 transformed values, one per neuron. Stacking such layers enables complex feature detection.
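To make the arithmetic concrete, here is a minimal Python sketch of one fully connected hidden layer's forward pass. It assumes 4 inputs, 4 neurons, and a tanh activation; the weight and bias values and the helper names `dense_layer` and `count_parameters` are hypothetical, chosen only for illustration.

```python
import math

def dense_layer(inputs, weights, biases, activation=math.tanh):
    """Forward pass of one fully connected hidden layer.

    weights[j][k] is the weight from input k to neuron j; biases[j] is neuron j's bias.
    Each neuron computes activation(sum_k weights[j][k] * inputs[k] + biases[j]).
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        pre_activation = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(pre_activation))
    return outputs


def count_parameters(n_inputs, n_neurons):
    """Learnable values: one weight per input per neuron, plus one bias per neuron."""
    return n_inputs * n_neurons + n_neurons


# Hypothetical example values: 4 inputs feeding a 4-neuron hidden layer.
x = [0.5, -1.0, 2.0, 0.0]
W = [
    [0.1, 0.2, 0.0, -0.3],
    [-0.5, 0.4, 0.1, 0.2],
    [0.3, 0.0, -0.2, 0.1],
    [0.0, -0.1, 0.25, 0.5],
]
b = [0.0, 0.1, -0.1, 0.05]

h = dense_layer(x, W, b)
print(len(h))                  # 4 outputs, one per neuron
print(count_parameters(4, 4))  # 20 = 16 weights + 4 biases
```

Real frameworks perform the same computation as a single matrix-vector product over whole batches; the explicit loop here just exposes each neuron's weighted sum.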

Modern convolutional neural networks often use 300 or more neurons (filters) per hidden layer and stack many such layers. It is this depth that allows hierarchical learning: early layers detect edges, while deeper ones identify objects or speech patterns.

Why Hidden Layers Matter

Without these intermediate stages, models could only perform linear input-output mappings. Hidden layers with nonlinear activations let systems learn intricate relationships. Google’s visualizations demonstrate how a single hidden layer can model curved decision boundaries that no linear model, such as linear or logistic regression, can produce.
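The classic XOR problem shows why nonlinearity matters: the points (0, 1) and (1, 0) must be separated from (0, 0) and (1, 1), and no straight line can do that. A minimal sketch, assuming two ReLU hidden neurons whose weights are picked by hand rather than learned (the function name `xor_network` is hypothetical), solves it exactly:

```python
def relu(z):
    return max(0.0, z)

def xor_network(x1, x2):
    # Hidden layer: two ReLU neurons with hand-picked (not learned) weights.
    h1 = relu(x1 + x2)        # counts how many inputs are on
    h2 = relu(x1 + x2 - 1.0)  # fires only when both inputs are on
    # Output layer: a purely linear combination of the hidden activations.
    return h1 - 2.0 * h2

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```

The output layer is linear, so all of the bending happens in the hidden layer: the ReLU kink in `h2` is what lets the network carve out the (1, 1) corner.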

However, adding layers trades computational efficiency for accuracy. Designers balance depth against training time, often using techniques like dropout to prevent overfitting in deep networks.

What Do Hidden Layers Do in a Neural Network?

Complex pattern recognition requires intermediate steps that reshape unstructured inputs. These stages, known as hidden layers, act as filters that distill raw data into actionable insights. Without them, AI systems would struggle with tasks like image classification or speech translation.

Transforming Input Data

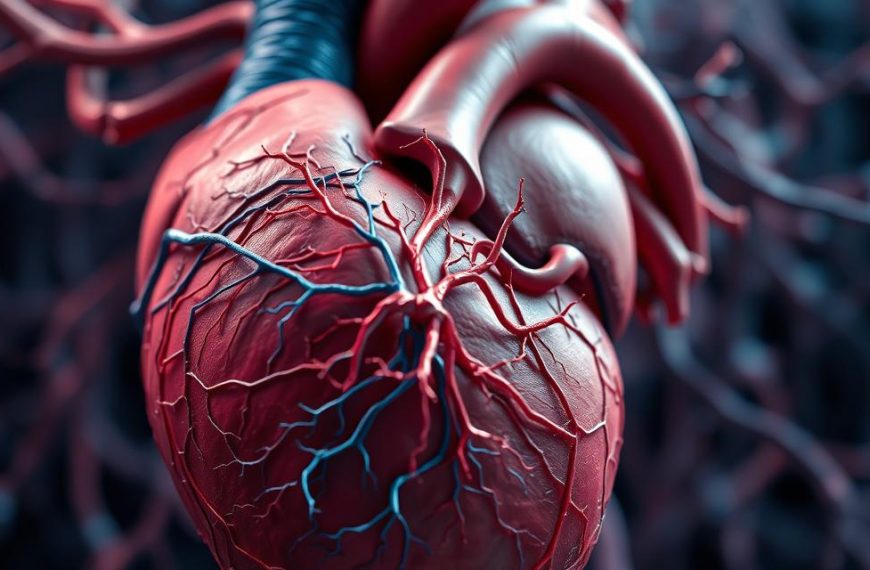

Hidden layers convert raw input data into refined representations. In medical imaging, for example, pixel values transform into edges, textures, and tumor markers. Each layer applies weights and biases to highlight relevant patterns.

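Even a single hidden layer can model curved decision boundaries; with enough neurons, it can in principle approximate any continuous function on a bounded input range, a result known as the universal approximation theorem. This capability is critical for distinguishing subtle differences, such as benign versus malignant cells.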

Learning Hierarchical Features

Convolutional neural networks demonstrate how layers build feature hierarchies:

| Layer Depth | Features Detected | Real-World Application |
|---|---|---|
| Early Layers | Edges, colors | Autonomous vehicle lane detection |
| Middle Layers | Shapes, textures | Facial recognition systems |
| Deep Layers | Objects, speech patterns | Medical diagnosis tools |

Enabling Nonlinear Relationships

Nonlinear activation functions like ReLU allow layers to model complex relationships. Without them, any stack of layers collapses into a single linear transformation, so linear operations alone couldn’t achieve the 23% accuracy boost seen in modern speech recognition.
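The reason linear layers alone are not enough can be checked directly: two stacked layers without an activation function compute the same result as one layer whose weight matrix is the product of theirs. A minimal sketch with hypothetical 2-3-2 weights (biases are omitted for brevity; including them still yields a single affine map):

```python
def matmul(A, B):
    """Multiply matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def apply(W, x):
    """Apply matrix W to a vector x given as a plain list."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

# Two "hidden layers" with no activation function (hypothetical weights).
W1 = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]   # 2 inputs -> 3 units
W2 = [[1.0, -1.0, 2.0], [0.0, 1.0, 1.0]]     # 3 units -> 2 outputs

x = [2.0, -1.0]
two_layers = apply(W2, apply(W1, x))
one_layer = apply(matmul(W2, W1), x)   # the single equivalent matrix W2 @ W1
print(two_layers, one_layer)           # identical results
```

Inserting a ReLU between the two layers breaks this equivalence, which is exactly what lets extra depth add expressive power.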

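Backpropagation computes how much each weight contributed to the error, and an optimizer such as gradient descent then adjusts the weights accordingly. Repeated over many training examples, this iterative process steers the network toward the features most relevant to each task.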
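A minimal sketch of backpropagation for a tiny network, assuming one tanh hidden layer of two neurons, a linear output neuron, and a squared-error loss. The weights, the training example, and the learning rate of 0.1 are hypothetical. The code applies the chain rule layer by layer, checks one gradient against a finite-difference estimate, and then takes a single gradient-descent step.

```python
import math

def forward(x, W1, b1, w2, b2):
    """One tanh hidden layer followed by a single linear output neuron."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sum(w * hj for w, hj in zip(w2, h)) + b2
    return h, y

def loss_fn(y, target):
    return 0.5 * (y - target) ** 2

def backward(x, target, W1, b1, w2, b2):
    """Backpropagation: apply the chain rule from the loss back to every parameter."""
    h, y = forward(x, W1, b1, w2, b2)
    dy = y - target                          # dLoss/dy
    dw2 = [dy * hj for hj in h]              # dLoss/dw2_j
    db2 = dy
    # dLoss/dz_j for each hidden pre-activation z_j, using tanh'(z) = 1 - tanh(z)^2
    dz = [dy * w * (1.0 - hj ** 2) for w, hj in zip(w2, h)]
    dW1 = [[dzj * xi for xi in x] for dzj in dz]
    db1 = dz
    return dW1, db1, dw2, db2

# Hypothetical tiny network and a single training example.
x, target = [1.0, -2.0], 0.5
W1 = [[0.3, -0.1], [0.2, 0.4]]
b1 = [0.0, 0.1]
w2 = [0.7, -0.5]
b2 = 0.05

dW1, db1, dw2, db2 = backward(x, target, W1, b1, w2, b2)

# Gradient check: compare one backprop gradient with a central finite difference.
eps = 1e-6
W1_plus = [row[:] for row in W1]
W1_plus[0][1] += eps
W1_minus = [row[:] for row in W1]
W1_minus[0][1] -= eps
numeric = (loss_fn(forward(x, W1_plus, b1, w2, b2)[1], target)
           - loss_fn(forward(x, W1_minus, b1, w2, b2)[1], target)) / (2 * eps)
print(dW1[0][1], numeric)

# One gradient-descent step moves every parameter against its gradient.
lr = 0.1
W1_new = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, dW1)]
b1_new = [b - lr * g for b, g in zip(b1, db1)]
w2_new = [w - lr * g for w, g in zip(w2, dw2)]
b2_new = b2 - lr * db2
before = loss_fn(forward(x, W1, b1, w2, b2)[1], target)
after = loss_fn(forward(x, W1_new, b1_new, w2_new, b2_new)[1], target)
print(before, after)   # the loss drops after the update
```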

Types of Hidden Layers in Neural Networks

Different architectures shape how artificial intelligence processes information. Each design optimizes for specific tasks, from image analysis to sequential data prediction. Below are three dominant frameworks revolutionizing machine learning.

Hidden Layers in Convolutional Neural Networks (CNNs)

Convolutional neural networks excel in visual data interpretation. They use stacked layers to detect hierarchical features:

- Convolutional layers: Apply 5×5 kernels to identify edges in medical scans

- Pooling layers: Reduce spatial dimensions for efficient processing

- Fully connected layers: Classify features, like tumors in MRI analysis
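The convolution and pooling stages can be sketched in plain Python. This assumes a toy 6×6 grayscale image with a vertical edge, a hand-chosen 3×3 Sobel-style kernel (real networks learn their kernels, and sizes such as 3×3 or 5×5 are design choices), a ReLU, and 2×2 max pooling. As in deep learning libraries, the hypothetical `conv2d` helper computes a cross-correlation without flipping the kernel.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation with stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu_map(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

# Toy 6x6 "image": dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# A 3x3 vertical-edge kernel (Sobel-like), chosen by hand for illustration.
kernel = [[-1, 0, 1],
          [-2, 0, 2],
          [-1, 0, 1]]

features = relu_map(conv2d(image, kernel))  # 4x4 feature map
pooled = max_pool(features)                 # 2x2 map after 2x2 pooling
print(features)
print(pooled)
```

The feature map responds only in the columns that straddle the edge, and pooling then halves each spatial dimension while keeping the strongest responses.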

One reported 32-layer CNN achieved 98.7% accuracy in tumor recognition, illustrating the diagnostic value of these architectures.

Hidden Layers in Recurrent Neural Networks (RNNs)

Recurrent neural networks process sequential data such as speech or text. LSTM gates within these layers retain context across time steps. Google's neural machine translation system, deployed in Google Translate in 2016, was built on stacked LSTM layers and markedly improved translation quality.

| Layer Type | Function | Impact |
|---|---|---|
| LSTM Cells | Memory retention | Accurate context prediction |
| Gated Units | Selective forgetting | Reduces noise in long sequences |
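The gating described in the table can be sketched as a single LSTM time step. This is a simplified illustration, assuming a scalar input and a hidden size of 1 (real layers use vectors and weight matrices); the gate parameters are hypothetical, with a large forget-gate bias so that the cell keeps most of its memory between steps.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of an LSTM cell with scalar input and hidden size 1.

    p maps each gate name to a hypothetical (input weight, recurrent weight, bias) triple.
    """
    def gate(name, act):
        w_x, w_h, b = p[name]
        return act(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)       # fraction of the old cell state to keep (0..1)
    i = gate("input", sigmoid)        # fraction of the new candidate to write (0..1)
    o = gate("output", sigmoid)       # fraction of the cell state to expose (0..1)
    g = gate("candidate", math.tanh)  # candidate value to add, in (-1, 1)

    c = f * c_prev + i * g            # cell state: the long-term memory
    h = o * math.tanh(c)              # hidden state passed to the next step
    return h, c

params = {
    "forget": (0.0, 0.0, 3.0),     # large bias keeps the forget gate near 1
    "input": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 2.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 0.0
cell_states = []
for x in [1.0, 0.0, 0.0, 0.0]:     # a single "event" followed by silence
    h, c = lstm_step(x, h, c, params)
    cell_states.append(c)
print([round(v, 3) for v in cell_states])
```

After the input event, the cell state decays only slowly over the silent steps because the forget gate stays close to 1; a forget gate near 0 would instead erase the memory.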

Hidden Layers in Generative Adversarial Networks (GANs)

Generative adversarial networks pit two deep neural components against each other:

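The generator creates synthetic data, while the discriminator critiques it by trying to tell it apart from real data. Training alternates between the two networks, often with one or more discriminator updates per generator update (the ratio is a tunable hyperparameter), which gradually refines the generator's outputs.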
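The adversarial game can be expressed as two losses. A minimal sketch, assuming the binary cross-entropy formulation from the original GAN paper and the commonly used non-saturating generator loss; the discriminator outputs below are hypothetical probabilities, not outputs of a trained model. Training alternates between minimizing `discriminator_loss` with respect to the discriminator's weights and minimizing `generator_loss` with respect to the generator's weights.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator outputs (probabilities) on real samples.
    d_fake: discriminator outputs on generated samples.
    The discriminator wants d_real -> 1 and d_fake -> 0.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants d_fake -> 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs at two stages of training.
confident_d = discriminator_loss([0.9, 0.95], [0.05, 0.1])  # discriminator winning
fooled_d = discriminator_loss([0.6, 0.5], [0.5, 0.6])        # generator catching up
print(confident_d, fooled_d)
print(generator_loss([0.05, 0.1]), generator_loss([0.5, 0.6]))
```

Each network's loss falls exactly when the other's situation worsens, which is the tension that drives the generator toward more realistic samples.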

DCGAN variants use batch normalization for stable training, and later GAN architectures built on these ideas to reach photorealistic image generation.
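Batch normalization itself is a short computation. A minimal sketch for one feature across a mini-batch in training mode, assuming scalar learnable parameters `gamma` (scale) and `beta` (shift). DCGAN-style convolutional layers apply the same idea per channel, and at inference time running averages of the mean and variance replace the batch statistics.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Batch normalization for one feature across a mini-batch (training mode).

    Standardizes the values to zero mean and unit variance, then applies the
    learnable scale (gamma) and shift (beta).
    """
    n = len(batch)
    mean = sum(batch) / n
    var = sum((v - mean) ** 2 for v in batch) / n   # biased (1/n) batch variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in batch]

activations = [2.0, 4.0, 6.0, 8.0]   # hypothetical pre-activations of one unit
normed = batch_norm(activations)
print([round(v, 3) for v in normed])
```

Because every mini-batch is rescaled to a similar range, later layers see inputs with a stable distribution, which is the property DCGAN relies on for stable training.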

How to Implement Hidden Layers Effectively

Building powerful AI models requires strategic configuration of computational layers. The right setup balances accuracy with computational efficiency, adapting to specific tasks like image recognition or time-series forecasting. The following steps reflect common practice.

Step 1: Determine the Number of Hidden Layers

Task complexity dictates layer depth. Optical character recognition (OCR) systems typically use 3-5 layers, while autonomous vehicles may require 50 or more for environmental analysis. Treat these benchmarks as rough starting points:

- Simple tasks: 1-3 layers (e.g., spam detection)

- Moderate complexity: 5-10 layers (e.g., medical imaging)

- Advanced systems: 50+ layers (e.g., real-time translation)
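Depth also has a direct cost in parameters. A minimal sketch, assuming a fully connected network with equal-width hidden layers (the 20 input features, 1 output, and width of 64 are hypothetical), counts weights and biases as depth grows. It does not tell you the right depth for a task; it only shows that each additional 64-unit layer adds 64 × 64 + 64 = 4,160 parameters to train.

```python
def mlp_layer_sizes(n_inputs, n_outputs, n_hidden_layers, width):
    """Layer sizes for a fully connected network with equal-width hidden layers."""
    return [n_inputs] + [width] * n_hidden_layers + [n_outputs]

def count_parameters(sizes):
    """Weights plus biases for every pair of consecutive layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))

# Hypothetical task: 20 input features, 1 output, 64-unit hidden layers.
for depth in (1, 3, 10, 50):
    sizes = mlp_layer_sizes(20, 1, depth, 64)
    print(depth, count_parameters(sizes))
```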

According to Google research, adding layers beyond what a task requires can increase training time by 300% without improving accuracy.

Step 2: Choose the Right Activation Functions

Nonlinear activation functions determine how layers process information. Compare popular options:

| Function | Advantages | Typical Use Cases |
|---|---|---|
| ReLU | Faster training; mitigates vanishing gradients | Hidden layers in image classification (CNNs) |
| Sigmoid | Outputs in (0, 1), interpretable as a probability | Output layer for binary classification |
| Tanh | Zero-centered outputs in (-1, 1) | Recurrent networks |
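The functions in the table and their derivatives take a few lines each. This sketch also shows, under a simplifying assumption that ignores the weights (which also enter the product), why sigmoid is prone to vanishing gradients: backpropagation multiplies one activation derivative per layer, and the sigmoid derivative never exceeds 0.25.

```python
import math

def relu(z):
    return max(0.0, z)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0    # subgradient 0 chosen at z == 0

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)            # peaks at 0.25 when z == 0

def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2  # peaks at 1.0 when z == 0

for z in (-4.0, 0.0, 4.0):
    print(z, relu(z), round(sigmoid(z), 3), round(math.tanh(z), 3),
          relu_grad(z), round(sigmoid_grad(z), 3), round(tanh_grad(z), 3))

# Even in the best case, sigmoid shrinks the gradient 4x per layer.
depth = 10
print(sigmoid_grad(0.0) ** depth)   # 0.25 ** 10, roughly 9.5e-07
print(relu_grad(1.0) ** depth)      # 1.0 for active ReLU units
```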

Microsoft’s benchmarks show ReLU accelerates training by 40% compared to sigmoid in deep networks.

Step 3: Optimize Layer Parameters

Tuning hyperparameters, such as the number of neurons per layer, the learning rate, and the dropout rate, can improve both accuracy and efficiency. Key techniques include:

- Grid search: Tests predefined value combinations systematically

- Random search: Explores wider parameter spaces efficiently

- Batch normalization: Standardizes inputs to layers, reducing training time
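Grid search and random search differ only in how candidate settings are generated. A minimal sketch, assuming a hypothetical `validation_score` function that stands in for training a model and measuring its validation accuracy (here a toy formula that peaks at a learning rate of 0.01 and a dropout rate of 0.3):

```python
import itertools
import math
import random

def validation_score(learning_rate, dropout):
    """Hypothetical stand-in for 'train the model and measure validation accuracy'.

    A smooth toy function that peaks near learning_rate=0.01, dropout=0.3.
    """
    return 1.0 - 0.1 * (math.log10(learning_rate) + 2.0) ** 2 - (dropout - 0.3) ** 2

def grid_search(grid):
    """Try every combination of the listed values."""
    best = None
    for lr, dr in itertools.product(grid["learning_rate"], grid["dropout"]):
        score = validation_score(lr, dr)
        if best is None or score > best[0]:
            best = (score, lr, dr)
    return best

def random_search(n_trials, seed=0):
    """Sample each hyperparameter from a range instead of a fixed list."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-4, -1)   # log-uniform: equal weight per order of magnitude
        dr = rng.uniform(0.0, 0.6)
        score = validation_score(lr, dr)
        if best is None or score > best[0]:
            best = (score, lr, dr)
    return best

grid = {"learning_rate": [0.0001, 0.001, 0.01, 0.1], "dropout": [0.2, 0.3, 0.5]}
print(grid_search(grid))     # 12 trials; picks learning_rate=0.01, dropout=0.3 here
print(random_search(12))     # 12 trials with 12 distinct learning rates
```

With the same budget of 12 trials, random search tries 12 distinct learning rates instead of 4, which is why it tends to explore wide ranges more efficiently when only a few hyperparameters really matter.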

“Proper hyperparameter tuning can improve model accuracy by 15-20% with the same architecture.”

Dropout layers, with drop rates typically between 0.2 and 0.5, help prevent overfitting in deep networks at little computational cost.
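A minimal sketch of dropout, assuming the "inverted" formulation that most frameworks implement: surviving activations are rescaled during training so that nothing needs to change at inference time. The random generator is passed in explicitly so results are reproducible.

```python
import random

def dropout(activations, rate, training, rng):
    """Inverted dropout.

    During training, each activation is zeroed with probability `rate`, and the
    survivors are scaled by 1 / (1 - rate) so the expected value is unchanged.
    At inference time the values pass through untouched.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(42)
hidden = [1.0] * 10_000
dropped = dropout(hidden, rate=0.5, training=True, rng=rng)
print(sum(v == 0.0 for v in dropped) / len(dropped))  # fraction dropped, close to 0.5
print(sum(dropped) / len(dropped))                     # mean activation, close to 1.0
print(dropout(hidden, 0.5, training=False, rng=rng) == hidden)  # True: no-op at inference
```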

Conclusion

From CNNs to GANs, layered architectures redefine machine intelligence. Convolutional neural networks achieve high accuracy on image tasks (87% in some reported benchmarks), while RNNs excel in time-series analysis. GANs generate synthetic data, pushing creative boundaries.

Hidden layers are what distinguish deep learning from shallow, linear models. Advances in quantum computing may eventually enable faster, more efficient designs. Meanwhile, enterprises already adopt layered models for complex tasks like fraud detection.

On sufficiently complex tasks, a 10-layer model can outperform a single-layer system by as much as 40% in accuracy. For hands-on training, explore deep learning foundations through DeepLearning.AI’s Deep Learning Specialization on Coursera.
